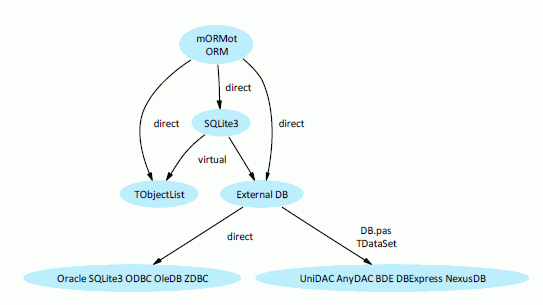

Our mORMot framework tries to implement security via:

- Process safety;

- Authentication;

- Authorization.

Process safety is implemented at every n-Tier level:

- Atomicity of the SQLite3 database core;

- RESTful architecture to avoid most synchronization issues;

- ORM associated to the Object pascal strong type syntax;

- Extended test coverage of the framework core.

Authentication allows user identification:

- Build-in optional authentication mechanism, implementing both per-user

sessions and individual REST Query Authentication;

- Authentication groups are used for proper authorization;

- Several authentication schemes, from very secure SHA-256 based challenging to

weak but simple authentication;

- Class-based architecture, allowing custom extension.

Authorization of a given process is based on the group policy,

after proper authentication:

- Per-table access right functionalities built-in at lowest level of

the framework;

- Per-method execution policy for interface-based services;

- General high-level security attributes, for SQL or Service remote

execution.

We will now give general information about both authentication and

authorization in the framework.

In particular, authentication is now implemented via a set of classes.

Authentication

Extracted from Wikipedia:

Authentication (from Greek: "real" or "genuine", from "author") is the

act of confirming the truth of an attribute of a datum or entity. This might

involve confirming the identity of a person or software program, tracing the

origins of an artifact, or ensuring that a product is what its packaging and

labeling claims to be. Authentication often involves verifying the validity of

at least one form of identification.

Principles

How to handle authentication in a RESTful Client-Server architecture is a

matter of debate.

Commonly, it can be achieved, in the SOA over HTTP world via:

- HTTP basic auth over HTTPS;

- Cookies and session management;

- Query Authentication with additional signature parameters.

We'll have to adapt, or even better mix those techniques, to match our

framework architecture at best.

Each authentication scheme has its own PROs and CONs, depending on the

purpose of your security policy and software architecture:

| Criteria | HTTPS basic auth | Cookies+Session | Query Auth. |

| Browser integration | Native | Native | Via JavaScript |

| User Interaction | Rude | Custom | Custom |

Web Service use

(rough estimation) | 95% | 4% | 1% |

| Session handling | Yes | Yes | No |

| Session managed by | Client | Server | N/A |

| Password on Server | Yes | Yes/No | N/A |

| Truly Stateless | Yes | No | Yes |

| Truly RESTful | No | No | Yes |

| HTTP-free | No | No | Yes |

HTTP basic auth over HTTPS

This first solution, based on the standard HTTPS protocol, is used by most

web services. It's easy to implement, available by default on all browsers, but

has some known draw-backs, like the awful authentication window displayed on

the Browser, which will persist (there is no LogOut-like feature

here), some server-side additional CPU consumption, and the fact that the

user-name and password are transmitted (over HTTPS) into the Server (it should

be more secure to let the password stay only on the client side, during

keyboard entry, and be stored as secure hash on the Server).

The supplied TSQLHttpClientWinHTTP and

TSQLHttpClientWinINet clients classes are able to connect using

HTTPS, and the THttpApiServer server class can send compatible

content.

Session via Cookies

To be honest, a session managed on the Server is not truly Stateless. One

possibility could be to maintain all data within the cookie content. And, by

design, the cookie is handled on the Server side (Client in fact don’t even try

to interpret this cookie data: it just hands it back to the server on each

successive request). But this cookie data is application state data, so the

client should manage it, not the server, in a pure Stateless world.

The cookie technique itself is HTTP-linked, so it's not truly RESTful, which

should be protocol-independent. Since our framework does not provide only HTTP

protocol, but offers other ways of transmission, Cookies were left at the

baker's home.

Query Authentication

Query Authentication consists in signing each RESTful request via

some additional parameters on the URI. See

http://broadcast.oreilly.com/2009/12/principles-for-standardized-rest-authentication.html

about this technique. It was defined as such in this article:

All REST queries must be authenticated by signing the query parameters

sorted in lower-case, alphabetical order using the private credential as the

signing token. Signing should occur before URI encoding the query

string.

For instance, here is a generic URI sample from the link above:

GET /object?apiKey=Qwerty2010

should be transmitted as such:

GET /object?timestamp=1261496500&apiKey=Qwerty2010&signature=abcdef0123456789

The string being signed is

"/object?apikey=Qwerty2010×tamp=1261496500" and the signature is

the SHA256 hash of that string using the private component of the API

key.

This technique is perhaps the more compatible with a Stateless architecture,

and can also been implemented with a light session management.

Server-side data caching is always available. In our framework, we cache the

responses at the SQL level, not at the URI level (thanks to our optimized

implementation of GetJSONObjectAsSQL, the URI to SQL conversion is

very fast). So adding this extra parameter doesn't break the cache

mechanism.

Framework authentication

Even if, theoretically speaking, Query Authentication sounds to be

the better for implementing a truly RESTful architecture, our framework tries

to implement a Client-Server design.

In practice, we may consider two way of using it:

- With no authentication nor user right management (e.g. for local access of

data, or framework use over a secured network);

- With per-user authentication and right management via defined security

groups, and a per-query authentication.

According to RESTful principle, handling per-session data is not to be

implemented in such architecture. A minimal "session-like" feature was

introduced only to handle user authentication with very low overhead on both

Client and Server side. The main technique used for our security is therefore

Query Authentication, i.e. a per-URI signature.

If both AuthGroup and AuthUser are not available

on the Server TSQLModel (i.e. if the

aHandleUserAuthentication parameter was set to false

for the TSQLRestServer. Create constructor), no authentication is

performed. All tables will be accessible by any client, as stated in 19. As

stated above, for security reasons, the ability to execute INSERT /

UPDATE / DELETE SQL statement via a RESTful POST command is

never allowed by default with remote connections: only SELECT can

be executed via this POST verb.

On the Server side, a dedicated service, accessible via the

ModelRoot/Auth URI is to be called to register an User, and create

a session.

If authentication is enabled for the Client-Server process (i.e. if both

AuthGroup and AuthUser are available in the Server

TSQLModel, and the aHandleUserAuthentication

parameter was set to true at the TSQLRestServer

instance construction), the following security features will be added:

- Client should open a session to access to the Server, and provide a

valid UserName / Password pair (see next paragraph);

- Each CRUD statement is checked against the authenticated User security group,

via the AccessRights column and its GET / POST / PUT /

DELETE per-table bit sets;

- Thanks to Per-User authentication, any SQL statement commands may be

available via the RESTful POST verb for an user with its

AccessRights group field containing

AllowRemoteExecute=true;

- Each REST request will expect an additional parameter, named

session_signature, to every URL. Using the URI instead of

cookies allows the signature process to work with all communication

protocols, not only HTTP.

Per-User authentication

On the Server side, two tables, defined by the TSQLAuthGroup

and TSQLAuthUser classes will handle respectively per-group access

rights, and user authentication.

The corresponding AccessRights column is a textual CSV

serialization of the TSQLAccessRights record content, as expected

by the TSQLRestServer.URI method. Using a CSV serialization,

instead of a binary serialization, will allow the change of the

MAX_SQLTABLES constant value.

The AuthUser table, as defined by the TSQLAuthUser

class type.

Each user has therefore its own associated AuthGroup table, a

name to be entered at login, a name to be displayed on screen or reports, and a

SHA-256 hash of its registered password. A custom Data BLOB field

is specified for your own application use, but not accessed by the

framework.

By default, the following security groups are created on a void

database:

| AuthGroup | POST SQL | Auth Read | Auth Write | Tables R | Tables W |

| Admin | Yes | Yes | Yes | Yes | Yes |

| Supervisor | No | Yes | No | Yes | Yes |

| User | No | No | No | Yes | Yes |

| Guest | No | No | No | Yes | No |

Then the corresponding 'Admin', 'Supervisor' and

'User' AuthUser accounts are created, with the default

'synopse' password.

You MUST override those default 'synopse' passwords for

each AuthUser record to a custom genuine value.

'Admin' will be the only group able to execute remote not SELECT

SQL statements for POST commands (i.e. to have TSQLAccessRights.

AllowRemoteExecute = true) and modify the Auth* tables

(i.e. AuthUser and AuthGroup) content.

A typical JSON representation of the default security user/group definitions

are the following:

[{"AuthUser":[

{"RowID":1,"LogonName":"Admin","DisplayName":"Admin","PasswordHashHexa":"67aeea294e1cb515236fd7829c55ec820ef888e8e221814d24d83b3dc4d825dd","GroupRights":1,"Data":null},

{"RowID":2,"LogonName":"Supervisor","DisplayName":"Supervisor","PasswordHashHexa":"67aeea294e1cb515236fd7829c55ec820ef888e8e221814d24d83b3dc4d825dd","GroupRights":2,"Data":null},

{"RowID":3,"LogonName":"User","DisplayName":"User","PasswordHashHexa":"67aeea294e1cb515236fd7829c55ec820ef888e8e221814d24d83b3dc4d825dd","GroupRights":3,"Data":null}]},

{"AuthGroup":[

{"RowID":1,"Ident":"Admin","SessionTimeout":10,"AccessRights":"11,1-256,0,1-256,0,1-256,0,1-256,0"},

{"RowID":2,"Ident":"Supervisor","SessionTimeout":60,"AccessRights":"10,1-256,0,3-256,0,3-256,0,3-256,0"},

{"RowID":3,"Ident":"User","SessionTimeout":60,"AccessRights":"10,3-256,0,3-256,0,3-256,0,3-256,0"},

{"RowID":4,"Ident":"Guest","SessionTimeout":60,"AccessRights":"0,3-256,0,0,0,0"}]}]

Of course, you can change AuthUser and AuthGroup

table content, to match your security requirements, and application

specifications. You can specify a per-table CRUD access, via the

AccessRights column, as we stated above, speaking about the

TSQLAccessRights record layout.

This will implement both Query Authentication together with a

group-defined per-user right management.

Session handling

A dedicated RESTful service, available from the ModelRoot/Auth

URI, is to be used for user authentication, handling so called sessions.

In mORMot, a very light in-memory set of sessions is

implemented:

- The unique ModelRoot/Auth URI end-point will create a session

after proper authorization;

- In-memory session allows very fast process and reactivity, on Server

side;

- An integersession identifier is used for all

authorization process, independently from the underlying authentication scheme

(i.e. mORMot is not tied to cookies, and its session process is much

more generic).

Those sessions are in-memory TAuthSession class instances. Note

that this class does not inherit from a TSQLRecord table so won't

be remotely accessible, for performance and security reasons.

The server methods should not have to access those instances directly, but

rely on the SessionID identifier. The only access available is via

the function TSQLRestServer.SessionGetUser(aSessionID: Cardinal):

TSQLAuthUser method.

When the Client is about to close (typically in

TSQLRestClientURI.Destroy), a GET

ModelRoot/auth?UserName=...&Session=... request is sent to the

remote server, in order to explicitly close the corresponding session in the

server memory (avoiding most re-play attacks).

Note that each opened session has an internal TimeOut parameter

(retrieved from the associated TSQLAuthGroup table content): after

some time of inactivity, sessions are closed on the Server Side.

In addition, sessions are used to manage safe cross-client

transactions:

- When a transaction is initiated by a client, it will store the corresponding

client Session ID, and use it to allow client-safe writing;

- Any further write to the DB (Add/Update/Delete) will be accessible only from

this Session ID, until the transaction is released (via commit or

rollback);

- If a transaction began and another client session try to write on the DB, it

will wait until the current transaction is released - a timeout may occur if

the server is not able to acquire the write status within some time;

- This global write locking is implemented in the TSQLRest.AcquireWrite /

ReleaseWrite protected methods, and used on the Server-Side by

TSQLRestServer.URI;

- If the server do not handle Session/Authentication, transactions can be

unsafe, in a multi-client concurrent architecture.

Therefore, for performance reasons in a multi-client environment, it's

mandatory to release a transaction (via commit or rollback) as soon as

possible.

Authentication schemes

Class-driven authentication

Authentication is implemented in mORMot via the following

classes:

| class | Scheme |

TSQLRestServerAuthenticationDefault | mORMot secure authentication scheme, based on a proprietary

dual-pass challenge and SHA-256 hashing |

TSQLRestServerAuthenticationSSPI | Windows authentication, via the logged user |

TSQLRestServerAuthenticationNone | Weak but simple authentication, based on user name |

All those classes will identify a TSQLAuthUser record from a

user name. The associated TSQLAuthGroup is then used later for

authorization.

You can add you own custom authentication scheme by defining your own class,

inheriting from TSQLRestServerAuthentication.

By default, no authentication is performed.

If you set the aHandleUserAuthentication parameter to

true when calling the constructor

TSQLRestServer.Create(), both default secure mORMot

authentication and Windows authentication are available. In fact, the

constructor executes the following:

constructor TSQLRestServer.Create(aModel: TSQLModel; aHandleUserAuthentication: boolean);

(...)

if aHandleUserAuthentication then// default mORMot authentication schemesAuthenticationRegister([TSQLRestServerAuthenticationDefault,TSQLRestServerAuthenticationSSPI]);

(...)

In order to define one or several authentication scheme, you can call the

AuthenticationRegister() and

AuthenticationUnregister() methods of

TSQLRestServer.

mORMot secure RESTful authentication

The TSQLRestServerAuthenticationDefault class implements a

proprietary but secure RESTful 18.

Here are the typical steps to be followed in order to create a new user

session via mORMot authentication scheme:

- Client sends a GET ModelRoot/auth?UserName=... request to the

remote server;

- Server answers with an hexadecimal nonce contents (valid for about 5

minutes), encoded as JSON result object;

- Client sends a GET

ModelRoot/auth?UserName=...&PassWord=...&ClientNonce=... request

to the remote server, in which ClientNonce is a random value used

as Client nonce, and PassWord is computed from the log-on

and password entered by the User, using both Server and Client nonce

as salt;

- Server checks that the transmitted password is valid, i.e. that its matches

the hashed password stored in its database and a time-valid Server

nonce - if the value is not correct, authentication fails;

- On success, Server will create a new in-memory session and return the session

number and a private key to be used during the session (encoded as JSON result

object);

- On any further access to the Server, a &session_signature=

parameter is added to the URL, and will be checked against the valid sessions

in order to validate the request.

Query Authentication is handled at the Client side in

TSQLRestClientURI.SessionSign method, by computing the

session_signature parameter for a given URL, according to the

TSQLRestServerAuthentication class used.

In order to enhance security, the session_signature parameter

will contain, encoded as 3 hexadecimal 32 bit cardinals:

- The Session ID (to retrieve the private key used for the signature);

- A Client Time Stamp (in 256 ms resolution) which must be greater or equal

than the previous time stamp received;

- The URI signature, using the session private key, the user hashed password,

and the supplied Client Time Stamp as source for its crc32 hashing

algorithm.

Such a classical 3 points signature will avoid most

man-in-the-middle (MITM) or re-play attacks.

Here is typical signature to access the root URL

root?session_signature=0000004C000F6BE365D8D454

In this case, 0000004C is the Session ID, 000F6BE3

is the client time stamp (aka nonce), and 65D8D454 is the

signature, checked by the following Delphi expression:

(crc32(crc32(fPrivateSaltHash,PTimeStamp,8),pointer(aURL),aURLlength)=aSignature);

For instance, a RESTful GET of the TSQLRecordPeople table with

RowID=6 will have the following URI:

root/People/6?session_signature=0000004C000F6DD02E24541C

For better Server-side performance, the URI signature will use fast

crc32 hashing method, and not the more secure (but much slower)

SHA-256. Since our security model is not officially validated as a standard

method (there is no standard for per URI authentication of RESTful

applications), the better security will be handled by encrypting the whole

transmission channel, using standard HTTPS with certificates signed by a

trusted CA, validated for both client and server side. The security involved by

using crc32 will be enough for most common use. Note that the password

hashing and the session opening will use SHA-256, to enhance security with no

performance penalty.

In our implementation, for better Server-side reaction, the

session_signature parameter is appended at the end of the URI, and

the URI parameters are not sorted alphabetically, as suggested by the reference

article quoted above. This should not be a problem, either from a Delphi Client

or from a AJAX / JavaScript client.

On practice, this scheme is secure and very fast, perfect for a Delphi

client.

Authentication using Windows credentials

By default, the hash of the user password is stored safely on the

server side. This may be an issue for corporate applications, since a new user

name / password pair is to be defined by each client, which may be

annoying.

Since revision 1.18 of the framework, mORMot is able to use

Windows Authentication to identify any user. That is, the user does

not need to enter any name nor password, but her/his Windows credentials, as

entered at Windows session startup, will be used.

If the SSPIAUTH conditional is defined (which is the default),

any call to TSQLRestClientURI.SetUser() method with a void

aUserName parameter will try to use current logged name and

password to perform a secure Client-Server authentication. It will in fact call

the class function

TSQLRestServerAuthenticationSSPI.ClientSetUser() method.

In this case, the aPassword parameter will just be ignored.

This will be transparent to the framework, and a regular session will be

created on success.

Only prerequisite is that the TSQLAuthUser table shall contain

a corresponding entry, with its LogonName column equals to

'DomainNameUserName' value. This data row won't be created

automatically, since it is up to the application to allow or disallow access

from an authenticated user: you can be member of the domain, but not eligible

to the application.

Weak authentication

The TSQLRestServerAuthenticationNone class can be used if you

trust your client (e.g. via a https connection). It will implement

a weak but simple authentication scheme.

Here are the typical steps to be followed in order to create a new user

session via this authentication scheme:

- Client sends a GET ModelRoot/auth?UserName=... request to the

remote server;

- Server checks that the transmitted user name is valid, i.e. that it is

available in the TSQLAuthGroup table - if the value is not

correct, authentication fails

- On success, Server will create a new in-memory session and returns the

associated session number (encoded as hexadecimal in the JSON result

object);

- On any further access to the Server, a &session_signature=

parameter is to be added to the URL with the correct session ID, and will be

checked against the valid sessions in order to validate the request.

For instance, a RESTful GET of the TSQLRecordPeople table with

RowID=6 will have the following URI:

root/People/6?session_signature=0000004C

Here is some sample code about how to define this authentication scheme:

// on the Server side:

Server.AuthenticationRegister(TSQLRestServerAuthenticationNone);

...

// on the Client side:

TSQLRestServerAuthenticationNone.ClientSetUser(Client,'User');

The performance benefit is very small in comparison to

TSQLRestServerAuthenticationDefault, so should not be used for

Delphi clients.

Clients authentication

Client interactivity

Note that with this design, it's up to the Client to react to an

authentication error during any request, and ask again for the User pseudo and

password at any time to create a new session. For multiple reasons (server

restart, session timeout...) the session can be closed by the Server without

previous notice.

In fact, the Client should just use create one instance of the

TSQLRestClientURI classes as presented in 6, then call the

SetUser method as such:

Check(Client.SetUser('User','synopse')); // use default userThen an event handled can be associated to the TSQLRestClientURI.

OnAuthentificationFailed property, in order to ask the user to enter its

login name and password:

TOnAuthentificationFailed = function(Retry: integer;

var aUserName, aPassword: string): boolean;

Of course, if Windows Authentication is defined (see above), this

event handler shall be adapted as expected. For instance, you may add a custom

notification to register the corresponding user to the

TSQLAuthUser table.

Authentication using AJAX

Some working JavaScript code has been published in our forum by a framework

user (thanks, "RangerX"), which implements the authentication schema as

detailed above. It uses jQuery, and HTML 5

LocalStorage, not cookies, for storing session information on the

Client side.

See http://synopse.info/forum/viewtopic.php?pid=2995#p2995

The current revision of the framework contains the code as expected by this

JavaScript code - especially the results encoded as 2 objects.

In the future, some "official" code will be available for such AJAX clients.

It will probably rely on pure-pascal implementation using such an

Object-Pascal-to-JavaScript compiler - it does definitively make sense

to have Delphi-like code on the client side, not to break the ORM design. For

instance, the Open Source DWS (DelphiWebScript) compiler

matches our needs - see http://delphitools.info/tag/javascript

Authorization

Per-table access rights

Even if authentication is disabled, a pointer to a

TSQLAccessRights record, and its GET / POST / PUT /

DELETE fields, is sent as a member of the parameter to the unique access

point of the server class:

procedure TSQLRestServer.URI(var Call: TSQLRestServerURIParams);

This will allow checking of access right for all CRUD operations, according

to the table invoked. For instance, if the table TSQLRecordPeople

has 2 as index in TSQLModel.Tables[], any incoming

POST command for TSQLRecordPeople will be allowed

only if the 2nd bit in RestAccessRights^.POST field is set, as

such:

case URI.Method of

mPOST: begin// POST=ADD=INSERTif URI.Table=nil then begin

(...)

end else// here, Table<>nil and TableIndex in [0..MAX_SQLTABLES-1]if not (URI.TableIndex in Call.RestAccessRights^.POST) then// check UserCall.OutStatus := HTML_FORBIDDEN else

(...)

Making access rights a parameter allows this method to be handled as pure

stateless, thread-safe and session-free, from the bottom-most level of the

framework.

On the other hand, the security policy defined by this global parameter does

not allow tuned per-user authorization. In the current implementation, the

SUPERVISOR_ACCESS_RIGHTS constant is transmitted for all handled

communication protocols (direct access, GDI messages, named pipe or HTTP). Only

direct access via TSQLRestClientDB will use

FULL_ACCESS_RIGHTS, i.e. will have AllowRemoteExecute

parameter set to true.

The light session process, as implemented by 18, is used to override the

access rights with the one defined in the

TSQLAuthGroup.AccessRights field.

Be aware than this per-table access rights depend on the table order as

defined in the associated TSQLModel. So if you add some tables to

your database model, please take care to add the new tables after the

existing. If you insert the new tables within current tables, you will need to

update the access rights values.

Additional safety

A AllowRemoteExecute: TSQLAllowRemoteExecute field has been

made available in the TSQLAccessRights record to tune remote

execution, depending on the authenticated user.

It adds some options to tune the security policy.

SQL remote execution

In our RESTful implementation, the POST command with no table associated in

the URI allows to execute any SQL statement directly.

This special command should be carefully tested before execution, since SQL

misuses could lead into major security issues. Such execution on any remote

connection, if the SQL statement is not a SELECT, is unsafe. In fact, if it may

affect the data content.

By default, for security reasons, this AllowRemoteExecute field

value in SUPERVISOR_ACCESS_RIGHTS constant does not include

reSQL. It means that no remote call will be allowed but for safe

read-only SELECT statements.

Another possibility of SQL remote execution is to add a

sql=.... inline parameter to a GET request (with optional paging).

The reUrlEncodedSQL option is used to enable or disable this

feature.

Last but not least, a WhereClause=... inline parameter can be

added to a DELETE request. The reUrlEncodedDelete option is used

to enable or disable this feature.

You can change the default safe policy by including reSQL,

reUrlEncodedSQL or reUrlEncodedDelete in the

TSQLAuthGroup.AccessRights field if an authentication user

session. But since remote execution of any SQL statements can be unsafe, we

recommend to write a dedicated server-side service (method-based or

interface-based) to execute such statements.

Service execution

The reService option can be used to enable or unable the

interface-based services feature of mORMot.

In addition to this global parameter, you can set per-service and per-method

security via dedicated methods.

For method-based services, if authentication is enabled, any method

execution will be processed only for signed URI.

You can use TSQLRestServer.ServiceMethodByPassAuthentication()

to disable the need of a signature for a given service method - e.g. it is the

case for Auth and TimeStamp standard method

services.

Feedback is welcome on our

forum, as usual.